| 26 Oct 2021 |

Dan Sun | In reply to @_slack_kubeflow_U02JVFFP213:matrix.org

I should add that I just downgraded to KFServing 0.6.1 and was able to test the sklearn-iris service and get a 200 response. So it seems the 404 errors are specific to 0.7.0.

Which version of Kubernetes are you on? | 01:34:38 |

Dan Sun | In reply to @_slack_kubeflow_UFVUV2UFP:matrix.org

Matt Carlson 0.6.1 does not support raw kube deployment mode though | 01:35:26 |

| Ryan Russon changed their display name from _slack_kubeflow_U02JNHXU1CN to Ryan Russon. | 03:01:40 |

| Ryan Russon set a profile picture. | 03:01:44 |

| _slack_kubeflow_U02KQJZHEPJ joined the room. | 03:55:41 |

iamlovingit | In reply to @_slack_kubeflow_UFVUV2UFP:matrix.org

can you parse example.com correctly? | 08:32:59 |

| _slack_kubeflow_U02JYUJ2KA9 joined the room. | 09:29:30 |

| _slack_kubeflow_U02KT0QHY8Y joined the room. | 14:48:20 |

Alexandre Brown | Hello, I'm having trouble creating an InferenceService that requests a GPU.

I'm trying to deploy the flowers sample from the doc.

Kubeflow : 1.4 manifest

Error from kubectl describe :

Warning FailedScheduling 17s (x2 over 18s) default-scheduler 0/4 nodes are available: 1 Insufficient cpu, 4 Insufficient nvidia.com/gpu.

flower-inference.yaml

apiVersion: "serving.kubeflow.org/v1beta1"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  annotations:
    autoscaling.knative.dev/target: "1"
spec:
  template:
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: train-gpu
                operator: In
                values:
                - "true"
      tolerations:
      - key: "train-gpu-taint"
        operator: "Equal"
        value: "true"
        effect: "NoSchedule"
spec:
  predictor:
    tensorflow:
      storageUri: "gs://kfserving-samples/models/tensorflow/flowers"
      resources:
        limits:
          cpu: "1"
          memory: 2Gi
          nvidia.com/gpu: "1"
        requests:
          cpu: "1"
          memory: 2Gi
          nvidia.com/gpu: "1"

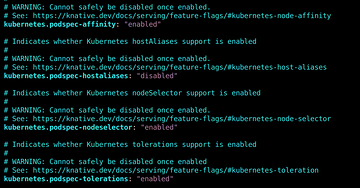

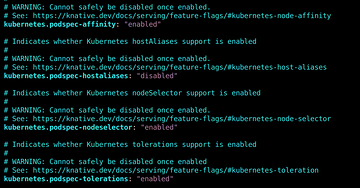

Dan Sun, I did as you suggested and enabled the Knative flag in the config map for the toleration and node affinity.

Any help is appreciated! | 14:58:45 |
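A note on reading the FailedScheduling message quoted above: each number in front of a reason is the count of nodes rejected for that reason. The sketch below is a rough illustration only. The function name `parse_failed_scheduling` is hypothetical, and the parsing assumes the message has the same shape as the one pasted in this thread.

```python
import re


def parse_failed_scheduling(message: str) -> dict:
    """Split a FailedScheduling message into node counts per rejection reason.

    Assumes the shape "<available>/<total> nodes are available: <n> <reason>, ...".
    """
    head = re.match(r"\s*(\d+)/(\d+) nodes are available:\s*(.*)", message)
    if head is None:
        raise ValueError("not a recognised FailedScheduling message")
    available, total = int(head.group(1)), int(head.group(2))
    rest = head.group(3).strip().rstrip(".")

    reasons = {}
    # Split only on commas that are followed by a new "<count> " prefix,
    # so commas inside a reason text do not break the parsing.
    for part in re.split(r",\s*(?=\d+\s)", rest):
        m = re.match(r"(\d+)\s+(.+)", part.strip())
        if m:
            reasons[m.group(2).strip()] = int(m.group(1))
    return {"available": available, "total": total, "reasons": reasons}


msg = "0/4 nodes are available: 1 Insufficient cpu, 4 Insufficient nvidia.com/gpu."
result = parse_failed_scheduling(msg)

# If every node is short on GPUs, the GPU node itself is not offering any.
gpu_missing_everywhere = (
    result["reasons"].get("Insufficient nvidia.com/gpu") == result["total"]
)
print(result, gpu_missing_everywhere)
```

Read this way, the message says all 4 nodes, including the one with the `train-gpu` label, were rejected for `nvidia.com/gpu`, which suggests the GPU node is not advertising the resource at all rather than being busy.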

Matt Carlson | In reply to @_slack_kubeflow_U0104H1616Z:matrix.org

Dan Sun I'm running on k8s 1.21 on EKS. Good point about 0.6.1. I should have been more concise in my description. I created a completely new/separate install with knative integration to get a 0.6.1 deployment to test with. | 14:59:47 |

Alexandre Brown | (edited) The node with the affinity is a p3.8xlarge instance (AWS), which has 4 GPUs available; I'm only requesting 1, though. | 15:01:27 |
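To rule out the toleration as the cause, the matching rule between a toleration and a taint can be sketched as below. This is a simplified illustration of the standard Kubernetes rule (same key, compatible effect, and either `Exists` or `Equal` with the same value), not the scheduler's actual code. The function name `tolerates` is hypothetical, and the taint values are the ones reported for the GPU node in this thread (`train-gpu-taint=true:NoSchedule`).

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    # An empty effect on the toleration matches every effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint.get("effect"):
        return False

    key = toleration.get("key", "")
    operator = toleration.get("operator", "Equal")  # Equal is the default

    # An empty key is only valid with Exists and then matches every taint.
    if not key:
        return operator == "Exists"
    if key != taint.get("key"):
        return False
    if operator == "Exists":
        return True
    return operator == "Equal" and toleration.get("value", "") == taint.get("value", "")


# Taint as reported on the GPU node in this thread.
node_taint = {"key": "train-gpu-taint", "value": "true", "effect": "NoSchedule"}
# Toleration from flower-inference.yaml.
pod_toleration = {
    "key": "train-gpu-taint",
    "operator": "Equal",
    "value": "true",
    "effect": "NoSchedule",
}
print(tolerates(pod_toleration, node_taint))
```

Under these assumptions the toleration matches the taint, so the taint alone would not explain the scheduling failure.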

| _slack_kubeflow_U02K3CDMJ10 joined the room. | 15:01:27 |

| Midhun Nair joined the room. | 15:02:20 |

Midhun Nair | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

Hey Alexandre. Seems like the error is more of an autoscaling/cluster issue. Did you check your cluster autoscaler? Is it up and running? I once faced this and couldn't find what was causing it until I found that the cluster autoscaler pod was down. | 15:02:20 |

Benjamin Tan | In reply to @_slack_kubeflow_U01G6CYC5M1:matrix.org

Is something using the GPU? | 15:02:38 |

Alexandre Brown Alexandre Brown | In reply to@_slack_kubeflow_UM56LA7N3:matrix.org

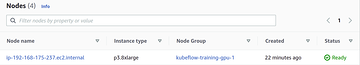

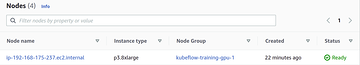

Is something using the GPU? I am not using autoscaling from 0 this is just a test cluster and nothing is using the GPU. Actually the GPU node couldnt be created initially when deploying the cluster due to zone availability, I manually added a new node to the cluster via AWS UI.

One thing strikes me tho, I do not see nvidia resource when doing node describe, is it normal? Should I not see it?

kubectl describe node ip-192-168-175-237.ec2.internal

Name: ip-192-168-175-237.ec2.internal

Roles: none

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/instance-type=p3.8xlarge

beta.kubernetes.io/os=linux

eks.amazonaws.com/capacityType=ON_DEMAND

eks.amazonaws.com/nodegroup=kubeflow-training-gpu-1

eks.amazonaws.com/nodegroup-image=ami-0254f335ce9e17a97

failure-domain.beta.kubernetes.io/region=us-east-1

failure-domain.beta.kubernetes.io/zone=us-east-1b

kubernetes.io/arch=amd64

kubernetes.io/hostname=ip-192-168-175-237.ec2.internal

kubernetes.io/os=linux

node.kubernetes.io/instance-type=p3.8xlarge

topology.kubernetes.io/region=us-east-1

topology.kubernetes.io/zone=us-east-1b

train-gpu=true

Annotations: node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Tue, 26 Oct 2021 10:45:46 -0400

Taints: train-gpu-taint=true:NoSchedule

Unschedulable: false

Lease:

HolderIdentity: ip-192-168-175-237.ec2.internal

AcquireTime: unset

RenewTime: Tue, 26 Oct 2021 11:03:48 -0400

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Tue, 26 Oct 2021 11:03:02 -0400 Tue, 26 Oct 2021 10:45:43 -0400 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Tue, 26 Oct 2021 11:03:02 -0400 Tue, 26 Oct 2021 10:45:43 -0400 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Tue, 26 Oct 2021 11:03:02 -0400 Tue, 26 Oct 2021 10:45:43 -0400 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Tue, 26 Oct 2021 11:03:02 -0400 Tue, 26 Oct 2021 10:47:57 -0400 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.175.237

Hostname: ip-192-168-175-237.ec2.internal

InternalDNS: ip-192-168-175-237.ec2.internal

Capacity:

attachable-volumes-aws-ebs: 39

cpu: 32

ephemeral-storage: 20959212Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 251742828Ki

pods: 234

Allocatable:

attachable-volumes-aws-ebs: 39

cpu: 31850m

ephemeral-storage: 18242267924

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 248743532Ki

pods: 234

System Info:

Machine ID: 8cc18ddb255d4adebc72750ccfe15e92

System UUID: EC281CA2-4409-2E43-CF11-CEFC832E37CC

Boot ID: d54b6a48-1a3a-4e29-a730-6730b6ee38b3

Kernel Version: 4.14.248-189.473.amzn2.x86_64

OS Image: Amazon Linux 2

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.7

Kubelet Version: v1.18.20-eks-c9f1ce

Kube-Proxy Version: v1.18.20-eks-c9f1ce

ProviderID: aws:///us-east-1b/i-0cd69fa5348fb062d

Non-terminated Pods: (2 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system aws-node-7svm2 10m (0%) 0 (0%) 0 (0%) 0 (0%) 18m

kube-system kube-proxy-gjdkp 100m (0%) 0 (0%) 0 (0%) 0 (0%) 18m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 110m (0%) 0 (0%)

memory 0 (0%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

attachable-volumes-aws-ebs 0 0

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Starting 18m kubelet Starting kubelet.

Normal NodeHasSufficientMemory 18m (x2 over 18m) kubelet Node ip-192-168-175-237.ec2.internal status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 18m (x2 over 18m) kubelet Node ip-192-168-175-237.ec2.internal status is now: NodeHasNoDiskPressure

Normal NodeHasSufficientPID 18m (x2 over 18m) kubelet Node ip-192-168-175-237.ec2.internal status is now: NodeHasSufficientPID

Normal NodeAllocatableEnforced 18m kubelet Updated Node Allocatable limit across pods

Normal Starting 16m kube-proxy Starting kube-proxy.

Normal NodeReady 15m kubelet Node ip-192-168-175-237.ec2.internal status is now: NodeReady | 15:06:33 |
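The Capacity and Allocatable sections above list no `nvidia.com/gpu` entry. A minimal sketch of that check follows, assuming the node object has been fetched as JSON (for example with `kubectl get node <name> -o json`). The helper name `gpu_allocatable` is hypothetical, and the sample JSON is a trimmed stand-in built from the values in the describe output, not real command output.

```python
import json

GPU_RESOURCE = "nvidia.com/gpu"


def gpu_allocatable(node: dict, resource: str = GPU_RESOURCE) -> int:
    """Return how many GPUs a node advertises as allocatable (0 if the key is absent)."""
    allocatable = node.get("status", {}).get("allocatable", {})
    return int(allocatable.get(resource, "0"))


# Trimmed stand-in for the node JSON, using values from the describe output above.
node_json = """
{
  "metadata": {"name": "ip-192-168-175-237.ec2.internal"},
  "status": {
    "allocatable": {"cpu": "31850m", "memory": "248743532Ki", "pods": "234"}
  }
}
"""
node = json.loads(node_json)
count = gpu_allocatable(node)
print(f"{node['metadata']['name']} advertises {count} x {GPU_RESOURCE}")
```

A result of 0 on a GPU instance type usually means the resource was never registered with the kubelet on that node, which is consistent with the scheduler reporting "Insufficient nvidia.com/gpu" for every node.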

Matt Carlson | In reply to @_slack_kubeflow_U02JVFFP213:matrix.org

iamlovingit Apologies, but I don't quite understand your question. | 15:06:55 |

Alexandre Brown | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.9.0/nvidia-device-plugin.yml

Error from server (AlreadyExists): error when creating "https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.9.0/nvidia-device-plugin.yml": daemonsets.apps "nvidia-device-plugin-daemonset" already exists | 15:09:49 |

Benjamin Tan | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

Hmmm. I recall that's a cuda image that lets you do nvidia-smi | 15:11:03 |

Benjamin Tan | In reply to @_slack_kubeflow_UM56LA7N3:matrix.org

Check the logs on the nvidia pod | 15:11:26 |

Benjamin Tan | In reply to @_slack_kubeflow_UM56LA7N3:matrix.org

that usually gives a pretty good indication when something is wrong | 15:11:43 |

Alexandre Brown | In reply to @_slack_kubeflow_UM56LA7N3:matrix.org

Benjamin Tan The nvidia plugin pod? | 15:14:04 |

Benjamin Tan | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

yeah I think so | 15:14:33 |

Benjamin Tan | In reply to @_slack_kubeflow_UM56LA7N3:matrix.org

there should only be one if I recall | 15:14:43 |

Alexandre Brown | In reply to @_slack_kubeflow_UM56LA7N3:matrix.org

I have 3, no error in logs... Maybe I should try to redeploy | 15:16:04 |

Benjamin Tan | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

what do the logs say? | 15:16:16 |

Alexandre Brown | In reply to @_slack_kubeflow_U02AYBVSLSK:matrix.org

Normal Scheduled 110s default-scheduler Successfully assigned kube-system/nvidia-device-plugin-daemonset-rbvh9 to ip-192-168-175-237.ec2.internal

Normal Pulling 109s kubelet Pulling image "nvcr.io/nvidia/k8s-device-plugin:v0.9.0"

Normal Pulled 104s kubelet Successfully pulled image "nvcr.io/nvidia/k8s-device-plugin:v0.9.0"

Normal Created 102s kubelet Created container nvidia-device-plugin-ctr

Normal Started 101s kubelet Started container nvidia-device-plugin-ctr | 15:17:02 |
